Children end up in child labour as a result of many, often unknown or hidden, interactions between multiple actors and multiple factors within households, communities, and labour systems in both formal and informal economies, leading to unpredictable outcomes for children and other sector stakeholders. The CLARISSA programme was designed to embrace, uncover and work with, rather than against, this inherent complexity in the systems that perpetuate the worst forms of child labour.

Embracing complexity

Within this participatory, evidence- and innovation-generating programme, which uses Systemic Action Research as its main implementation modality, evaluation is not simply a tool for measurement. Embracing complexity requires moving away from linear and predetermined models of evaluation. Our innovative evaluation design combines different methods to ensure that learning and participation support adaptive management. A series of Learning Notes tells the story of our rich methodological and process learning.

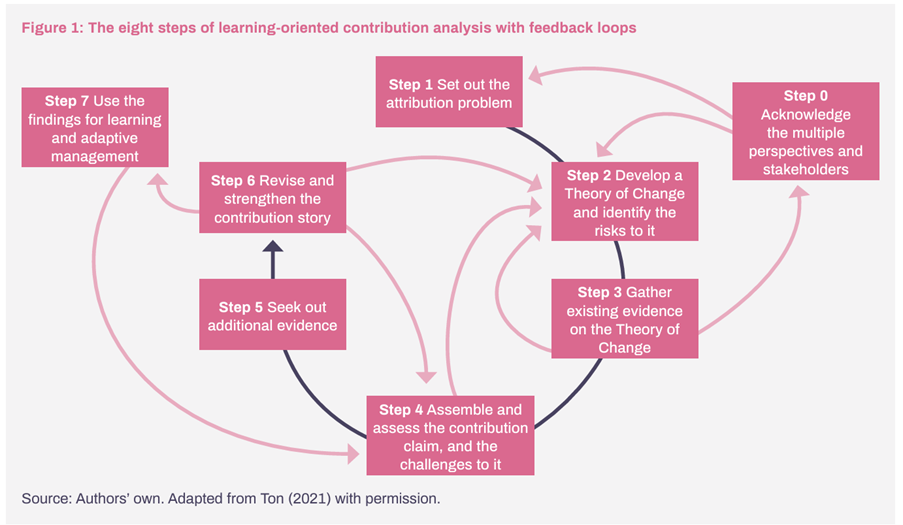

Evaluation is part of the programme’s research agenda, generating evidence and learning and contributing to theory. We value and use multiple forms of evidence, including lived experience and practitioner learning alongside formal published evidence. A few years into the programme, we described our approach, which is based on Contribution Analysis and its seven steps that enable iterative learning.

Evaluation research is structured around three impact pathways and zooms in on a number of causal hotspots within them. For a synthetic view of how we understand impact to ripple out from our activities, along spheres of control, influence and interest, see our interactive Theory of Change. Further reflections on our journey with the non-linear use of theory of change are also available.

Evaluation of each of the impact pathways has been generating evidence and learning that feed back into implementation. We pay particular attention to the quality of our evidence by applying a set of evidence quality rubrics to build reflexivity and navigate bias.

The findings

In 2024, we will be publishing findings from our main modules:

- How, in what contexts and for whom does Participatory Action Research generate effective innovations?

- Evaluating a social protection intervention to tackle the worst forms of child labour (WFCL) in Dhaka, Bangladesh

- Does child-led advocacy influence policymakers’ and decision makers’ willingness to meaningfully listen to children and take action that reflects the views, needs and concerns that children share, and how? Are the outcomes effective?

- Did the CLARISSA partnership support a culture for child-centred and adaptive programming?

- How has the participatory adaptive management approach in CLARISSA been operationalised, and has it contributed to programme effectiveness?